2019.02.22

Tutorial 2 - Industry Practices and Tools


01.What is the need for VCS?

Version control systems are a category of software tools that help a software team manage changes to source code over time. Version control software keeps track of every modification to the code in a special kind of database. If a mistake is made, developers can turn back the clock and compare earlier versions of the code to help fix the mistake while minimizing disruption to all team members.

Version control helps teams work in parallel, tracking every individual change by each contributor and helping prevent concurrent work from conflicting. Changes made in one part of the software can be incompatible with those made by another developer working at the same time. This problem should be discovered and solved in an orderly manner without blocking the work of the rest of the team. Further, in all software development, any change can introduce new bugs on its own, and new software can't be trusted until it's tested. So testing and development proceed together until a new version is ready.

Developing software without using version control is risky, like not having backups. Version control can also enable developers to move faster and it allows software teams to preserve efficiency and agility as the team scales to include more developers.

02.Differentiate the three models of VCSs, stating their pros and cons?

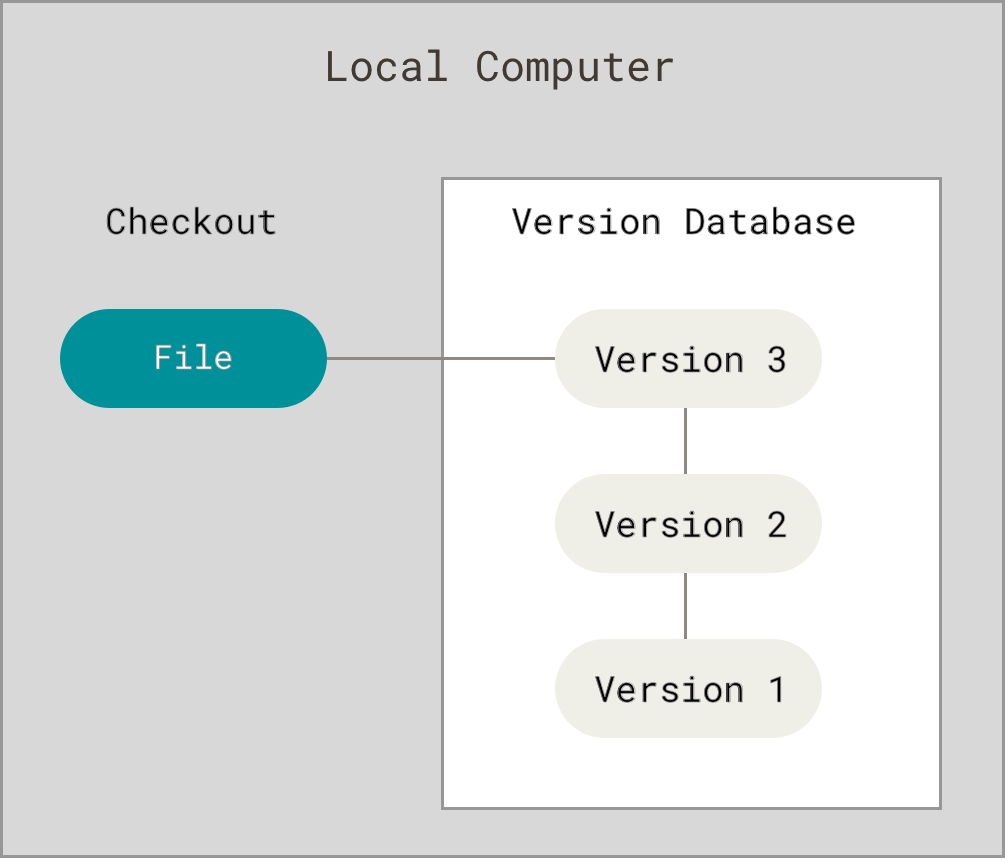

Local Version Control Systems

Many people’s version-control method of choice is to copy files into another directory (perhaps a time-stamped directory, if they’re clever). This approach is very common because it is so simple, but it is also incredibly error prone. It is easy to forget which directory you’re in and accidentally write to the wrong file or copy over files you don’t mean to.

To deal with this issue, programmers long ago developed local VCSs that had a simple database that kept all the changes to files under revision control.

One of the more popular VCS tools was a system called RCS, which is still distributed with many computers today. RCS works by keeping patch sets (that is, the differences between files) in a special format on disk; it can then re-create what any file looked like at any point in time by adding up all the patches.
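The patch-set idea can be sketched in a few lines of Python. This is only a toy illustration, not RCS's actual on-disk format or algorithm: the names `make_patch`, `apply_patch` and `ToyHistory` are made up for this example, and it stores forward patches from the first version using the standard `difflib` module.

```python
from difflib import SequenceMatcher


def make_patch(old, new):
    """Return the edits that turn `old` into `new` (both are lists of lines)."""
    opcodes = SequenceMatcher(a=old, b=new, autojunk=False).get_opcodes()
    # Keep only the changed regions: (start, end, replacement lines).
    return [(i1, i2, new[j1:j2]) for tag, i1, i2, j1, j2 in opcodes if tag != "equal"]


def apply_patch(old, patch):
    """Apply a patch produced by make_patch to a copy of `old`."""
    result = list(old)
    # Work from the end of the file so earlier indices stay valid.
    for start, end, lines in reversed(patch):
        result[start:end] = lines
    return result


class ToyHistory:
    """Hypothetical local VCS: one base version plus a list of patches."""

    def __init__(self, first_version):
        self.base = list(first_version)
        self.patches = []

    def commit(self, new_version):
        latest = self.checkout(len(self.patches))
        self.patches.append(make_patch(latest, list(new_version)))

    def checkout(self, revision):
        """Re-create revision N by adding up the first N patches."""
        lines = list(self.base)
        for patch in self.patches[:revision]:
            lines = apply_patch(lines, patch)
        return lines
```

Calling `checkout(0)` returns the first version, and `checkout(n)` replays the first `n` patches, which is the "adding up all the patches" step described above.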

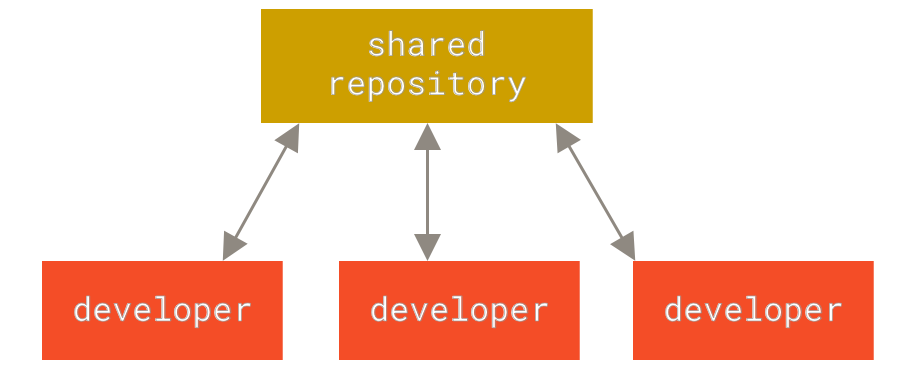

Centralized Version Control Systems

The next major issue that people encounter is that they need to collaborate with developers on other systems. To deal with this problem, Centralized Version Control Systems (CVCSs) were developed. These systems (such as CVS, Subversion, and Perforce) have a single server that contains all the versioned files, and a number of clients that check out files from that central place. For many years, this has been the standard for version control.

Figure 2. Centralized version control.

This setup offers many advantages, especially over local VCSs. For example, everyone knows to a certain degree what everyone else on the project is doing. Administrators have fine-grained control over who can do what, and it’s far easier to administer a CVCS than it is to deal with local databases on every client.

However, this setup also has some serious downsides. The most obvious is the single point of failure that the centralized server represents. If that server goes down for an hour, then during that hour nobody can collaborate at all or save versioned changes to anything they’re working on. If the hard disk the central database is on becomes corrupted, and proper backups haven’t been kept, you lose absolutely everything — the entire history of the project except whatever single snapshots people happen to have on their local machines. Local VCS systems suffer from this same problem — whenever you have the entire history of the project in a single place, you risk losing everything.

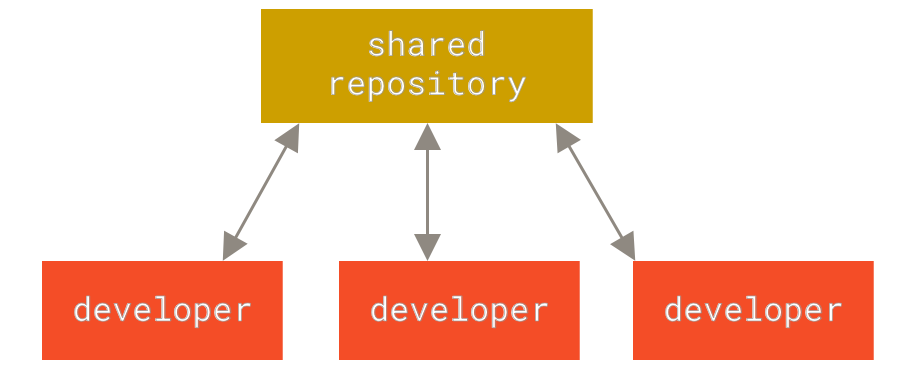

Distributed Version Control Systems

This is where Distributed Version Control Systems (DVCSs) step in. In a DVCS (such as Git, Mercurial, Bazaar or Darcs), clients don’t just check out the latest snapshot of the files; rather, they fully mirror the repository, including its full history. Thus, if any server dies, and these systems were collaborating via that server, any of the client repositories can be copied back up to the server to restore it. Every clone is really a full backup of all the data.

Figure 3. Distributed version control.

Furthermore, many of these systems deal pretty well with having several remote repositories they can work with, so you can collaborate with different groups of people in different ways simultaneously within the same project. This allows you to set up several types of workflows that aren’t possible in centralized systems, such as hierarchical models.

03.Git and GitHub, are they same or different? Discuss with facts.

No, they are not the same. Git is a revision control system, a tool to manage your source code history, and it works on your own machine without GitHub. GitHub is a hosting service for Git repositories, so GitHub depends on Git and not the other way around. In short: Git is the tool, GitHub is the service for projects that use Git.

04.Compare and contrast the Git commands, commit and push?

Since Git is a distributed version control system, every developer has a full local repository. The difference is that git commit records your changes in the local repository, whereas git push updates a remote repository with your local commits. A commit works offline and is visible only to you until it is pushed.
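The difference can be modelled with a small Python sketch. It is a simplification rather than how Git works internally: `ToyRepo` and `push` are hypothetical names, history is assumed to be a simple linear list, and the remote is assumed never to be ahead of the local repository.

```python
class ToyRepo:
    """Hypothetical repository holding a linear list of commit messages."""

    def __init__(self):
        self.commits = []

    def commit(self, message):
        # A commit only touches this repository; no network is involved.
        self.commits.append(message)


def push(local, remote):
    """Send the commits the remote does not have yet; return how many were sent."""
    missing = local.commits[len(remote.commits):]
    remote.commits.extend(missing)
    return len(missing)
```

After two calls to `local.commit(...)` the remote is still empty; only `push(local, remote)` makes those commits visible to collaborators.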

05.Discuss the use of staging area and Git directory

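The basic Git workflow goes something like this: you modify files in your working directory; you stage the files, adding snapshots of them to your staging area; you do a commit, which takes the files as they are in the staging area and stores that snapshot permanently in your Git directory. The staging area therefore lets you choose exactly what goes into the next commit, and the Git directory is where the committed history is stored.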
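The three areas can be pictured with a minimal Python model. This is an assumption-laden sketch, not Git's real data structures: `ToyGit` and its attribute names are invented for illustration, and files are plain strings in dictionaries.

```python
class ToyGit:
    """Hypothetical model of the working directory, staging area and Git directory."""

    def __init__(self):
        self.working = {}   # path -> content, freely editable
        self.staging = {}   # snapshots chosen for the next commit
        self.commits = []   # permanent snapshots (the "Git directory")

    def add(self, path):
        # Staging copies the file as it looks right now.
        self.staging[path] = self.working[path]

    def commit(self, message):
        # A commit stores what is staged, not what is in the working directory.
        snapshot = dict(self.staging)
        self.commits.append((message, snapshot))
        return snapshot
```

If a file is edited again after `add`, the commit still contains the staged version, which is why the staging area decides what ends up in a commit.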

06.Explain the collaboration workflow of Git, with example?

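A typical Git collaboration workflow has 6 steps.

1.Clone the shared remote repository

2.Create a branch for the change

3.Commit the work to the local repository

4.Pull the latest changes from the remote and resolve any conflicts

5.Push the branch to the remote repository

6.Open a pull request so the change can be reviewed and merged

For example, a developer who fixes a login bug clones the project, creates a branch for the fix, commits it locally, pulls the latest changes from teammates, pushes the branch and asks for a review before it is merged.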

Gitflow Workflow is a Git workflow design that was first published and made popular by Vincent Driessen at nvie. The Gitflow Workflow defines a strict branching model designed around the project release. This provides a robust framework for managing larger projects.
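As a rough illustration of the Gitflow branching model, the Python sketch below returns where each kind of branch is merged when it is finished. The function name `merge_targets` is hypothetical, and the `feature/`, `release/` and `hotfix/` prefixes are an assumed naming convention; the branch names `master` and `develop` follow the commonly described model.

```python
def merge_targets(branch):
    """Return the long-lived branches a finished Gitflow branch is merged into."""
    if branch.startswith("feature/"):
        # Features branch off develop and merge back into develop.
        return ["develop"]
    if branch.startswith(("release/", "hotfix/")):
        # Releases and hotfixes go to master and are merged back into develop.
        return ["master", "develop"]
    raise ValueError("not a Gitflow supporting branch: " + branch)
```

For instance, `merge_targets("feature/login")` gives `["develop"]`, while a hotfix is merged into both long-lived branches so the fix is not lost in the next release.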

07.Discuss the benefits of CDNs?

• Improving website load times - By distributing content closer to website visitors by using a nearby CDN server (among other optimizations), visitors experience faster page loading times. As visitors are more inclined to click away from a slow-loading site, a CDN can reduce bounce rates and increase the amount of time that people spend on the site. In other words, a faster website means more visitors will stay and stick around longer.

• Reducing bandwidth costs - Bandwidth consumption costs for website hosting are a primary expense for websites. Through caching and other optimizations, CDNs are able to reduce the amount of data an origin server must provide, thus reducing hosting costs for website owners.

• Increasing content availability and redundancy - Large amounts of traffic or hardware failures can interrupt normal website function. Thanks to their distributed nature, CDNs can handle more traffic and withstand hardware failure better than many origin servers.

• Improving website security - A CDN may improve security by providing DDoS mitigation, improvements to security certificates, and other optimizations.

- Performance: reduced latency and minimized packet loss

- Scalability: automatically scale up for traffic spikes

- SEO Improvement: faster pages can help search ranking, since Google uses page speed as a ranking factor

- Reliability: automatic redundancy between edge servers

- Lower Costs: save bandwidth with your web host

- Security: a CDN can mitigate DDoS attacks at its edge servers

08.How do CDNs differ from web hosting servers?

Web hosting is used to host your website on a server and let users access it over the internet, and it normally refers to one server. A content delivery network is a global network of edge servers that distributes your content from a multi-host environment. A CDN therefore does not replace web hosting: the site still lives on an origin server, and the CDN delivers cached copies of its content from locations closer to the visitors.

09.Identify free and commercial CDNs

Commercial CDNs

Many large websites use commercial CDNs like Akamai Technologies to cache their web pages around the world. The first time a page is requested, by anyone, it is built by the web server, but it is then also cached on the CDN server. When another customer comes to that same page, the CDN first checks whether its cached copy is up-to-date. If it is, the CDN delivers it; otherwise, it requests the page from the server again and caches that copy.
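The request flow described above can be sketched as a small cache in Python. This is a simplified model and not any real CDN's API: the class `EdgeCache`, the `fetch_from_origin` callback and the fixed time-to-live used to decide whether a copy is "up-to-date" are all assumptions made for the example.

```python
import time


class EdgeCache:
    """Hypothetical CDN edge server that caches pages for a fixed time."""

    def __init__(self, fetch_from_origin, ttl_seconds=60.0, clock=time.monotonic):
        self.fetch_from_origin = fetch_from_origin  # called on a cache miss
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self.entries = {}          # path -> (body, time stored)
        self.origin_requests = 0   # how often the origin had to do the work

    def get(self, path):
        now = self.clock()
        entry = self.entries.get(path)
        if entry is not None and now - entry[1] < self.ttl_seconds:
            return entry[0]  # fresh copy: served from the edge
        # Missing or stale: ask the origin server and cache the new copy.
        body = self.fetch_from_origin(path)
        self.origin_requests += 1
        self.entries[path] = (body, now)
        return body
```

Only the first request for a page, or a request after the copy has expired, reaches the origin server, which is also where the bandwidth savings come from.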

A commercial CDN is a very useful tool for a large website that gets millions of page views, but it might not be cost effective for smaller websites.

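Examples of web libraries and tools that have been served from public CDNs (these are not CDN providers themselves):

- Chrome Frame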

- Dojo Toolkit

- Ext JS

- jQuery

- jQuery UI

- MooTools

- Prototype

Free CDNs

While there are a number of premium CDN solutions to choose from, there are also many free CDNs, mostly serving open source libraries, that can help decrease the costs of a project. Most likely you are already using some of them without even knowing it. Some of the free CDNs are listed below.

- Google CDN

- Microsoft Ajax CDN

- Yandex CDN

- jsDelivr

- cdnjs

- jQuery CDN

10.Discuss the requirements for virtualization

Virtualization describes a technology in which an application, guest operating system or data storage is abstracted away from the true underlying hardware or software. A key use of virtualization technology is server virtualization, which uses a software layer called a hypervisor to emulate the underlying hardware.

There is a gap between development and implementation environments:

• Different platforms

• Missing dependencies, frameworks/runtimes

• Wrong configurations

• Version mismatches

11.Discuss and compare the pros and cons of different virtualization techniques in different levels

Guest Operating System Virtualization

Guest OS virtualization is perhaps the easiest concept to understand. In this scenario the physical host computer system runs a standard unmodified operating system such as Windows, Linux, Unix or MacOS X. Running on this operating system is a virtualization application which executes in much the same way as any other application such as a word processor or spreadsheet would run on the system.

Shared Kernel Virtualization

Shared kernel virtualization (also known as system level or operating system virtualization) takes advantage of the architectural design of Linux and UNIX based operating systems. In order to understand how shared kernel virtualization works it helps to first understand the two main components of Linux or UNIX operating systems. At the core of the operating system is the kernel. The kernel, in simple terms, handles all the interactions between the operating system and the physical hardware. The second key component is the root file system which contains all the libraries, files and utilities necessary for the operating system to function. Under shared kernel virtualization the virtual guest systems each have their own root file system but share the kernel of the host operating system.

Kernel Level Virtualization

Under kernel level virtualization the host operating system runs on a specially modified kernel which contains extensions designed to manage and control multiple virtual machines each containing a guest operating system. Unlike shared kernel virtualization each guest runs its own kernel, although similar restrictions apply in that the guest operating systems must have been compiled for the same hardware as the kernel in which they are running. Examples of kernel level virtualization technologies include User Mode Linux (UML) and Kernel-based Virtual Machine (KVM).

12.Identify popular implementations and available tools for each level of virtualization

Guest operating system virtualization: Oracle VirtualBox and VMware Workstation.

Shared kernel virtualization: Linux-VServer, OpenVZ, Solaris Zones and Linux containers such as LXC and Docker.

Kernel level virtualization: User Mode Linux (UML) and Kernel-based Virtual Machine (KVM).

13. What is the hypervisor and what is the role of it?

A hypervisor or virtual machine monitor (VMM) is computer software, firmware or hardware that creates and runs virtual machines. The hypervisor presents the guest operating systems with a virtual operating platform and manages the execution of the guest operating systems.

14.How is emulation different from VMs?

Virtual machines make use of CPU self-virtualization, to whatever extent it exists, to provide a virtualized interface to the real hardware: guest code runs directly on the CPU, and only some operations are redirected to a hypervisor controlling the virtual container. Emulators emulate the hardware in software and do not rely on the CPU being able to run the guest code directly.

A specific x86 example might help: Bochs is an emulator, emulating an entire processor in software even when it's running on a compatible physical processor. QEMU is also an emulator, although with the use of a kernel-side kqemu package it gained some limited virtualization capability when the emulated machine matched the physical hardware; it could not really take advantage of full x86 self-virtualization, so it was a limited hypervisor. KVM is a virtual machine hypervisor.

A hypervisor could be said to "emulate" protected access; it doesn't emulate the processor, though, and it would be more correct to say that it mediates protected access.

Protected access means things like setting up page tables or reading/writing I/O ports. For the former, a hypervisor validates (and usually modifies, to match the hypervisor's own memory) the page table operation and performs the protected instruction itself; I/O operations are mapped to emulated device hardware instead of emulated CPU.
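The contrast between interpreting everything and mediating only protected access can be shown with a toy Python model. It is purely illustrative: the instruction names and the functions `run_emulated` and `run_virtualized` are invented, and no real CPU or hypervisor interface is involved.

```python
# Hypothetical set of operations that need protected access.
PRIVILEGED = {"set_page_table", "io_write"}


def run_emulated(program):
    """Emulator: every instruction is interpreted in software."""
    return [(op, "interpreted") for op in program]


def run_virtualized(program):
    """Hypervisor: ordinary instructions run directly, protected ones trap."""
    return [(op, "trapped" if op in PRIVILEGED else "direct") for op in program]
```

For a program such as `["add", "io_write"]`, the emulator interprets both instructions, while the virtualized run lets `add` execute directly and only `io_write` is handed to the hypervisor.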

And just to complicate things, Wine is also more a hypervisor/virtual machine (albeit at a higher ABI level) than an emulator (hence "Wine Is Not an Emulator").

15.Compare and contrast the VMs and containers/dockers, indicating their advantages and disadvantages
